Day 1: ICML 2021

This was the first time I had attended a full-blown major conference. For those of you who are unaware, ICML stands for International Conference on Machine Learning and is the third largest AI-ML conference in the world. The conference has three types of technical events:

- Talk Panel/Poster presentation - This is either in the form of 30 min talks or through virtual interaction in a virtual room on “gather”.

- Workshops - These are sessions where a team presents their work and its applications, and walks you through its real-life uses with examples.

- Tutorials - These are demo sessions where the presenter shows a demo of their working product and usually shares a copy of the code for the audience to test/tinker with.

Some of the spots in these events are reserved for the sponsors (usually tech megacorps/startups flush with cash who are doing work in cutting-edge AI). These events have an Expo tag next to them, and today was Expo Day.

I attended one Expo Workshop by Microsoft (4.5 hrs) and one Expo Talk Panel by IBM (0.5 hr) today, on very different and very interesting topics. These notes are ordered by how interesting I found each topic and are mostly just “notes”. Wherever possible, I will add my commentary on the topic!

Expo Talk Panel - IBM Analog Hardware Acceleration Kit

This is a really cool piece of tech being developed by IBM for fast, low-power training of small and medium-sized neural networks in a completely analog way. First of all, the links shared with us:

- Landing page: https://analog-ai.mybluemix.net

- Github page: https://github.com/IBM/aihwkit

- Documentation page: https://aihwkit.readthedocs.io/en/latest/

- AI hardware center webpage to learn more about other projects in the space of AI acceleration: https://www.research.ibm.com/artificial-intelligence/ai-hardware-center/

Before discussing what analog computing is, we need to understand why it helps.

- 1000x improvement in speed (without reducing precision)! This is by far the biggest factor. The speedup comes mainly from how efficient matrix multiplication is in the analog domain, where the laws of physics do the arithmetic. This removes the von Neumann bottleneck of CPU architectures and allows computation directly in memory.

- Much lower power consumption. There is no need to access DRAM or to keep moving data (from storage to RAM to the caches, and back and forth between RAM and cache).

With that out of the way, let’s discuss how it works: (Note: I didn’t clearly understand the device physics but here it goes)

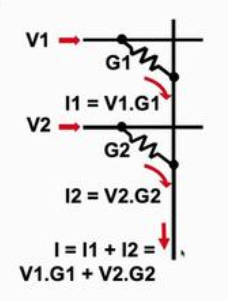

As is visible in the diagram, if one set of voltages is used to tune the conductances (G), which act as the weights, and another set of voltages is applied as the inputs, the output current of the structure is the dot product of the inputs with the weights! By Ohm’s law each conductor carries a current $G_i V_i$, and by Kirchhoff’s current law these currents add up on the shared output wire, giving $I = \sum_i G_i V_i$. Pretty cool, right?

If this is done on a grid of such conductors, we get an array that performs matrix-vector or matrix-matrix multiplication. All the output currents settle together, so the product is computed almost instantaneously across the whole matrix, saving a lot of time. Also, since the conductances are set by a separate source, the weights are essentially stored in the array itself!

Such a structure is called a crossbar array and each conductance (G) is a resistive processing unit.
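To make the crossbar idea concrete, here is a minimal pure-Python sketch of what the physics computes. It is only a numerical illustration under idealised assumptions (no noise, no wire resistance), not IBM's simulator; the function name `crossbar_mvm` and the example values are made up for this post.

```python
def crossbar_mvm(conductances, voltages):
    """Return the output current of each column of an ideal crossbar array.

    conductances[i][j] is the conductance joining input row i to output
    column j; voltages[i] is the voltage applied to input row i.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    assert len(voltages) == n_rows, "one input voltage per row"
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the current through one device: I = G * V.
            # Kirchhoff's current law adds the currents on a shared column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array: three inputs, two outputs.
G = [[1.0, 2.0],
     [0.5, 0.0],
     [2.0, 1.0]]
V = [1.0, 2.0, 3.0]
print(crossbar_mvm(G, V))
```

In software this costs one multiply-add per device; in the analog array all of those happen at once, which is where the single-time-step claim comes from.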

An obvious question is, how do we represent negative weights? Since negative resistance is not a reality (yet), the authors propose using a pair of crossbar arrays, so that every weight is stored as two conductances, and taking the difference of their output currents as the value of the neuron. This gives us a differential conductance pair (G+ and G-). This network is called an NVM (non-volatile memory) array.
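A small sketch of the differential-pair trick, as I understood it: each signed weight becomes two non-negative conductances and the two output currents are subtracted. The encoding below (put the whole magnitude on one side, zero on the other) is just one simple choice I am assuming for illustration; `encode_weight`, `g_max` and `w_max` are hypothetical names, not anything from the talk.

```python
def encode_weight(w, g_max=1.0, w_max=1.0):
    """Map a signed weight onto a non-negative conductance pair (G+, G-)."""
    if abs(w) > w_max:
        raise ValueError("weight outside the representable range")
    g = abs(w) / w_max * g_max
    return (g, 0.0) if w >= 0 else (0.0, g)


def differential_dot(weights, voltages, g_max=1.0, w_max=1.0):
    """Dot product computed as the difference of two all-positive crossbar columns."""
    pairs = [encode_weight(w, g_max, w_max) for w in weights]
    i_plus = sum(g_p * v for (g_p, _), v in zip(pairs, voltages))
    i_minus = sum(g_m * v for (_, g_m), v in zip(pairs, voltages))
    # Undo the weight-to-conductance scaling to get back to weight units.
    return (i_plus - i_minus) * w_max / g_max


weights = [0.5, -0.25, 1.0]
inputs = [1.0, 2.0, -1.0]
print(differential_dot(weights, inputs))
print(sum(w * v for w, v in zip(weights, inputs)))  # plain dot product for comparison
```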

The conductance can be provided using a variety of “devices” such as:

- PCM (Phase change memory) which transitions between crystalline and amorphous states

- RRAM (resistive RAM)

- ECRAM

- CMOS

- Photonics where ring resonators (to boost?) are used followed by photo detectors.

Based on these, they developed the open source Analog Hardware Acceleration Kit (aihwkit), which simulates how a network will learn on analog hardware for different “devices” and plots the predicted hardware behaviour. The kit is completely compatible with PyTorch (yay!) and is GPU-accelerated as well (CUDA).

From the QnA:

- Effects of temperature changes on the stability of training/inference (since voltages and currents are prone to thermal noise): “… So far our preliminary work indicates that we can compensate for temperature dependence of device conductance - and have demonstrate minimal accuracy loss across small to medium MLP, and CNN networks”

- Advantage of Analog over Digital: “(i) the synaptic weight are already mapped onto the NVM array (saves DRAM access energy), (ii) NxM matrix vector multiply can be done in a single time step using an NxM NVM array (performance improvement over digital) - and the improvement scales with the size of the NN layer”

Expo Workshop - Real World RL: Azure Personalizer & Vowpal Wabbit

Vowpal Wabbit

Notes:

- On-line machine learning system (the model is updated as each new piece of data arrives)

- Very extensible (similar to PyTorch and TensorFlow but for RL models)

- Built-in access to different datasets/RL environments

- Ability to convert a pandas DataFrame to the VW input format
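For anyone who has not seen VW's plain-text input before, a line looks like `label |namespace feature:value ...`. The sketch below hand-rolls that formatting for a list of dicts so the shape is visible. It is not the converter that ships with VW; `row_to_vw` and the column names are hypothetical, and it assumes feature names and values contain no spaces, `:` or `|`.

```python
def row_to_vw(row, label_col, namespace="f"):
    """Format one record (a dict) as a line of VW's plain-text input format."""
    parts = []
    for name, value in row.items():
        if name == label_col:
            continue
        if isinstance(value, (int, float)) and not isinstance(value, bool):
            # Numeric feature: written as name:value.
            parts.append(f"{name}:{value}")
        else:
            # Categorical feature: written as a single token with implicit value 1.
            parts.append(f"{name}={value}")
    return f"{row[label_col]} |{namespace} " + " ".join(parts)


rows = [
    {"clicked": 1, "age": 34, "device": "mobile"},
    {"clicked": -1, "age": 51, "device": "desktop"},
]
for row in rows:
    print(row_to_vw(row, label_col="clicked"))
```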

Azure Personalizer

Notes:

- Send a request containing the slots available for product placement on the webpage, along with an importance metric for each, and get back a personalized recommendation of what goes where.

- On-line learning enabling it to adapt to the user preferences and general trends. Uses CCB (conditional contextual bandit) for the model.

- Loss function defined by the developer to accurately measure what a positive interaction is and provide cost/reward to the RL system.

- Custom RL models can be defined and loss of all models can be compared.

- Easy to switch between models if a better one is found.
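The loop described above (ask for a choice, observe the user, turn the interaction into a reward, learn on-line) can be sketched with a toy epsilon-greedy contextual bandit. This is not the Personalizer API or its actual model; the class, the method names `choose`/`learn`, the reward values and the simulated user are all assumptions made for illustration.

```python
import random


class EpsilonGreedyBandit:
    """Toy contextual bandit keeping a running mean reward per (context, action)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.totals = {}  # (context, action) -> sum of rewards seen
        self.counts = {}  # (context, action) -> number of rewards seen

    def mean(self, context, action):
        n = self.counts.get((context, action), 0)
        return self.totals.get((context, action), 0.0) / n if n else 0.0

    def choose(self, context):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore
        return max(self.actions, key=lambda a: self.mean(context, a))  # exploit

    def learn(self, context, action, reward):
        # On-line update: one observation changes the model immediately.
        key = (context, action)
        self.totals[key] = self.totals.get(key, 0.0) + reward
        self.counts[key] = self.counts.get(key, 0) + 1


def reward_from_interaction(clicked, purchased):
    """Developer-defined notion of a positive interaction (values are made up)."""
    return 1.0 if purchased else 0.3 if clicked else 0.0


bandit = EpsilonGreedyBandit(["news", "sports", "music"], epsilon=0.2, seed=0)
for _ in range(500):
    context = "sports_fan"
    action = bandit.choose(context)
    clicked = action == "sports"  # simulated user, purely hypothetical
    bandit.learn(context, action, reward_from_interaction(clicked, purchased=False))
print({a: round(bandit.mean("sports_fan", a), 2) for a in bandit.actions})
```

The important design point is that the reward function is the developer's responsibility: the learner only ever sees the number it returns.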

Multi-Slot Learning - Slates vs Bandits

Notes:

- Used in the same context as Personalizer. We have certain contextual inputs (what are the positions for banners, what is the color of the banner etc.) which are conditioned on some other inputs (importance of position) and evaluated based on a global cost.

- Assumes that the slots are disjoint and linearly independent.

- Only a single cost is available (how long the user looked at your website/did they buy the suggested product/did they click on the link).

- Not integrated with Azure Personalizer currently, but integration is planned after performance validation.

- Available as a part of Vowpal Wabbit
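Here is how I picture the slates setting in code: every slot has its own action set, one action is picked per slot, and the only feedback is one global cost that gets credited to every chosen action. This is a toy sketch under the assumption that the true cost is a sum of independent per-slot contributions; it is not VW's slates implementation, and all names and numbers are hypothetical.

```python
import random


def choose_slate(estimates, epsilon, rng):
    """Pick one action per slot; estimates[slot][action] is a running mean cost."""
    slate = {}
    for slot, costs in estimates.items():
        actions = sorted(costs)
        if rng.random() < epsilon:
            slate[slot] = rng.choice(actions)  # explore
        else:
            slate[slot] = min(actions, key=costs.get)  # lowest estimated cost
    return slate


def update(estimates, counts, slate, global_cost):
    """Credit the single observed cost to the chosen action of every slot."""
    for slot, action in slate.items():
        counts[slot][action] += 1
        n = counts[slot][action]
        estimates[slot][action] += (global_cost - estimates[slot][action]) / n


# Hidden per-slot costs used only to simulate the environment.
hidden = {"banner": {"red": 0.6, "blue": 0.2},
          "position": {"top": 0.1, "side": 0.4}}
rng = random.Random(0)
estimates = {slot: {a: 0.0 for a in acts} for slot, acts in hidden.items()}
counts = {slot: {a: 0 for a in acts} for slot, acts in hidden.items()}

for _ in range(2000):
    slate = choose_slate(estimates, epsilon=1.0, rng=rng)  # pure exploration
    global_cost = sum(hidden[s][a] for s, a in slate.items())
    update(estimates, counts, slate, global_cost)

print(choose_slate(estimates, epsilon=0.0, rng=rng))  # greedy slate after learning
```

Because the slots are assumed independent, averaging the global cost per action is enough to rank the actions inside each slot, even though no per-slot cost is ever observed.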

Continuous Actions in VW

Notes:

- Binary tree structure defined based on $2^n$ terminal nodes given as input.

- Each terminal node corresponds to a bin from the output range.

- $\epsilon$-greedy system used during prediction. First the binary decision tree chooses a bin. Then a smoothing parameter (bandwidth?) is applied about the center of the chosen bin, so that the probability is higher around it.

- Sample randomly from that distribution and use the reward to teach the binary tree whether the chosen bin was correct. If we smooth over several bins, feed back to the binary tree that any of those bins is fine!

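- The smaller the bin size (the larger the number of terminal nodes), the lower the bias and the higher the variance in prediction, since the action range is discretized more finely but each bin sees less data.

- The larger the bandwidth, the lower the variance and the higher the bias in prediction, since the feedback is spread over a wider range of actions.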
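The pieces above (tree over $2^n$ bins, bin centers, smoothing band, $\epsilon$-greedy sampling) fit together roughly as in the sketch below. This is my own reconstruction, not VW's code: the routing function `go_right` stands in for the learned per-node decisions, and the uniform smoothing band is an assumption about how the bandwidth is applied.

```python
import random


def walk_tree(depth, go_right):
    """Descend a complete binary tree; go_right(level, node) is the routing decision."""
    node = 0
    for level in range(depth):
        node = 2 * node + (1 if go_right(level, node) else 0)
    return node  # leaf index in [0, 2**depth)


def bin_center(leaf, n_leaves, lo, hi):
    """Center of the bin that a leaf represents within the action range [lo, hi]."""
    width = (hi - lo) / n_leaves
    return lo + (leaf + 0.5) * width


def smoothed_sample(center, bandwidth, lo, hi, epsilon, rng):
    """Sample an action and return (action, probability density of that action)."""
    band_lo = max(lo, center - bandwidth)
    band_hi = min(hi, center + bandwidth)
    if rng.random() < epsilon:
        action = rng.uniform(lo, hi)  # explore over the whole range
    else:
        action = rng.uniform(band_lo, band_hi)  # stay near the chosen bin
    density = epsilon / (hi - lo)
    if band_lo <= action <= band_hi:
        density += (1 - epsilon) / (band_hi - band_lo)
    return action, density


def bins_within(action, bandwidth, n_leaves, lo, hi):
    """Leaves whose center lies within the bandwidth of the sampled action."""
    return [leaf for leaf in range(n_leaves)
            if abs(bin_center(leaf, n_leaves, lo, hi) - action) <= bandwidth]


depth, lo, hi = 3, 0.0, 8.0          # 2**3 = 8 bins over [0, 8]
rng = random.Random(0)
leaf = walk_tree(depth, lambda level, node: level % 2 == 0)  # dummy routing rule
center = bin_center(leaf, 2 ** depth, lo, hi)
action, density = smoothed_sample(center, 1.0, lo, hi, epsilon=0.1, rng=rng)
print(leaf, center, action, density)
print(bins_within(action, 1.0, 2 ** depth, lo, hi))  # bins that share the feedback
```

The returned density is what an importance-weighted update would divide the observed cost by, which is why a narrow band (small bandwidth) makes the estimates noisier.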